Description

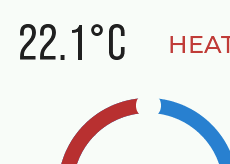

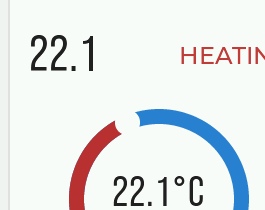

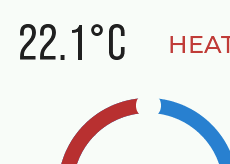

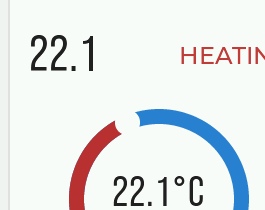

Sometimes the degrees C symbol won’t show in a label. I have it showing when the screen initialises so the font is definitely there.

lv_label_set_text(ui_TempSPLabel, "22.1°C");

It also displays correctly if I do

lv_label_set_text_fmt(ui_TempSPLabel, "%4.1f%sC", 22.1f, "°");

Later on, in an event callback, I can call the exact same function and both the degree symbol and the ‘C’ disappear.

lv_label_set_text_fmt(ui_TempSPLabel, "%4.1f%sC", 22.1f, "°");

It still disappears if I call lv_label_set_text(ui_TempSPLabel, "22.1°C");

It was working and now it is not. It is hit and miss. Also sometimes the capital ‘C’ will be displayed but no degC symbol. If I include a symbol not supported in my font, I get a box displayed but I don’t get that when I include the degC symbol so I know my font is correct.

What MCU/Processor/Board and compiler are you using?

Windows 10 and ESP32

What LVGL version are you using?

8.3.6 and 8.2.0

What do you want to achieve?

Have it display the degrees C symbol

Is your event callback located in a different source file?

If so, what is the character encoding of that file?

There is more than one degree looking symbol that can be used but not all of them are available in the fonts that come with LVGL. If you are using a custom font you have to make sure the font you are using has the symbol.

On Windows if you press and hold the ALT key and on the numeric keypad type in 0176 while holding down ALT a degree symbol ° will appear. This specific symbol I know is available in the standard font that comes with LVGL.

I am not sure why you are using this as your way of setting the label.

lv_label_set_text_fmt(ui_TempSPLabel, "%4.1f%sC", 22.1f, "°")

Would this not work?

lv_label_set_text_fmt(ui_TempSPLabel, "%4.1f°C", 22.1f)

Also check carefully the text alignment of the label widget and the size of the widget. I ran into this issue, with text getting truncated, when I had the alignment of the text either centered or aligned to the left edge.

See if you can reproduce the problem using only a single label and no other widgets: a minimal example that you can post the code for so we can take a look at it.

The event callback file was ANSI and the setup UTF-8, but I changed both to UTF-8 and I got the same issue. I then cut and pasted from the setup file to the callback file and it now works.
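For anyone wanting to check which bytes their source file really produced for the degree sign, here is a minimal sketch. `dump_bytes_hex` is a hypothetical debug helper (not an LVGL function), and the byte values in the comments assume the usual case where "ANSI" on Windows means Windows-1252. A UTF-8 file should give `C2 B0` for the symbol, while an ANSI file gives a lone `B0`.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical debug helper (not part of LVGL): writes the bytes of `s`
 * into `out` as space-separated uppercase hex, e.g. "C2 B0 43".
 * Returns the number of bytes of `s` that were formatted. */
size_t dump_bytes_hex(const char *s, char *out, size_t out_size)
{
    assert(s != NULL && out != NULL && out_size > 0);

    size_t len = strlen(s);
    size_t used = 0;
    size_t i;

    out[0] = '\0';
    for (i = 0; i < len; i++) {
        /* Worst case per byte: separator + two hex digits + NUL. */
        if (used + 4 > out_size) {
            break;
        }
        used += (size_t)snprintf(out + used, out_size - used,
                                 i ? " %02X" : "%02X",
                                 (unsigned)(unsigned char)s[i]);
    }
    return i;
}
```

Calling it on the exact literal you pass to the label (for example `dump_bytes_hex("°C", out, sizeof out)` in both the setup file and the callback file) and logging `out` shows whether the two files really agree.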

It was working in the past and it stopped working. I think what you were saying about multiple symbols was correct, however I thought it was weird that if I explicitly put a symbol in that wasn’t supported in my font (‘x’ in my case) I would get a square box where the character was meant to be. I never got that and also the capital ‘C’ was also missing.

My original call was:

lv_label_set_text_fmt(ui_TempSPLabel, "%4.1f%cC", val, 0xB0 );

and that never worked which was very odd as it is all ascii. I checked the font .c file and the following is in there:

/* U+00B0 "°" */

0x0, 0x3, 0x9c, 0xc9…

so I changed the call to:

lv_label_set_text_fmt(ui_TempSPLabel, "%4.1f%°C", val );

which never worked so I ended up with:

lv_label_set_text_fmt(ui_TempSPLabel, "%4.1f%sC", val, "°" );

which worked for a while.

I just tried

lv_label_set_text_fmt(ui_TempSPLabel, "%4.1f%cC", val, 0xB0 );

again and that does NOT work. both files are UTF-8 and in this case I don’t think that even matters.

This also does not work:

lv_label_set_text_fmt(ui_TempSPLabel, "%4.1f%cC", val, 0x00B0 );

nor this:

lv_label_set_text_fmt(ui_TempSPLabel, "%4.1f%lcC", val, (wchar_t)0x00B0 );

0xB0 is not 0176, it is 176. LVGL does not have 176, it has 0176. They are identical glyphs, but how they are encoded into the font file is different. When you want to use that symbol it is easier to use it literally: hold down the Alt key, type 0176 on the numeric pad, then let go of the Alt key. That will enter the proper literal for you.

0xB0 is NOT UTF-8; it is 8-bit extended ASCII. The UTF-8 encoding is 0xC2 0xB0, I think. I am going to have to double-check.

I did some looking.

lv_label_set_text_fmt(ui_TempSPLabel, "%4.1f%cC", val, 0xF8 );

That should work.

export.zip (11.9 KB)

I tried 0xF8 but that didn’t work. It is in ui_events.c. I understand what is happening now. The file is ui_events.c and is UTF-8 now and I am using the line

lv_label_set_text_fmt(ui_TestLabel, "%4.1f%sC", val, "°");

which works so all is good.

I explored further and no character code above 127 will be displayed at all in the following

lv_label_set_text_fmt(ui_TestLabel, "%c", val );

Anyway, I’ll use °

Also, as you can imagine, this does NOT work:

lv_label_set_text_fmt(ui_TestLabel, "%4.1f%cC", val, '°');

Just adding this here in case others read the thread.

I really do hate unicode, doesn’t matter what programming language you have to deal with it in, it always sucks.

No, unicode doesn’t suck

It’s more that all the previously used 8-bit encodings suck.

First was ASCII (a 7-bit encoding, using the lower 7 bits of a byte).

Then ‘they’ introduced 8-bit encodings (like ISO 8859-1 or Windows-1252) to also use the upper bit of a byte.

But with 8 bits you cannot cover all the different languages that exist.

You can make different 8-bit encodings (like ISO 8859-1, ISO 8859-2 etc.)

But you cannot write text for different languages at the same time. Let’s say Cyrillic text and Western European text in the same file. Not to speak of Chinese, which has far more glyphs than can be mapped with 8 bits.

For that they invented Unicode. Unicode started out as a 16-bit encoding, which maps up to 65,536 symbols/characters, and has since been extended beyond 16 bits.

The problem is now that all the computer infrastructure (editor, compiler etc.) was working with 8-bit characters.

So the UTF-8 encoding was invented. 8-bit characters could still be used, but all the characters which were > 127 were ‘escaped’ to use two or more bytes.

There would be no problem at all if the whole world used just one Unicode encoding.

But for historical reasons there are a lot of 8-bit sources. And UTF-8 is a kind of workaround.

The nightmare when working with different encodings is to know what is the encoding, and from what encoding I need to convert to any other encoding.

For many IDEs/editors etc. it is hard to find out what is the current encoding of a file.

lvgl expects a utf-8 encoded file.

In utf-8 the ° is encoded into two bytes (0xc2 followed by 0xb0). From the two bytes (0xc2 and 0xb0) a 16-bit value (0x00b0) is calculated. This 16-bit value is now the offset into the font/glyph table to display the °.
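To make the calculation concrete, here is a minimal sketch of decoding a two-byte UTF-8 sequence into its code point. `utf8_decode_2byte` is a hypothetical name for illustration and is not LVGL's own decoder; it handles only the two-byte case discussed here.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical helper: decodes a two-byte UTF-8 sequence at `p`.
 * Returns the code point, or 0 if the bytes are not a valid
 * two-byte sequence. */
uint32_t utf8_decode_2byte(const unsigned char *p)
{
    assert(p != 0);

    /* Lead byte must look like 110xxxxx, continuation like 10xxxxxx. */
    if ((p[0] & 0xE0) != 0xC0 || (p[1] & 0xC0) != 0x80) {
        return 0;
    }

    /* 5 payload bits from the lead byte, 6 from the continuation byte. */
    uint32_t cp = ((uint32_t)(p[0] & 0x1F) << 6) | (uint32_t)(p[1] & 0x3F);

    /* Reject overlong encodings of values that fit in one byte. */
    return (cp < 0x80) ? 0 : cp;
}
```

For 0xC2 0xB0 this gives (0x02 << 6) | 0x30 = 0xB0, the U+00B0 listed in the font file. A lone 0xB0 is rejected because it has the bit pattern of a continuation byte, not a lead byte.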

One additional note:

If you have an 8-bit encoding (like ISO 8859-1) and you count the number of characters, it is the same as the number of bytes you need to hold the string.

But if you have a UTF-8 encoding, the number of characters is not necessarily the number of bytes needed to hold the string.

So if you have the ° in a string (array of char) in ISO 8859-1 you have one character and you need one byte for that character.

But if the ° is within a utf-8 encoded string you have still one character but you need two bytes for storing.
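A small sketch of the difference, assuming the input is valid UTF-8. `utf8_char_count` is a hypothetical helper name: it counts every byte that is not a continuation byte (bit pattern 10xxxxxx), which equals the number of characters.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical helper: number of characters (code points) in a
 * valid UTF-8 string. Continuation bytes are skipped. */
size_t utf8_char_count(const char *s)
{
    assert(s != NULL);

    size_t n = 0;
    for (; *s != '\0'; s++) {
        if (((unsigned char)*s & 0xC0) != 0x80) {
            n++;
        }
    }
    return n;
}
```

For "22.1°C" stored as UTF-8 this returns 6 characters, while the string occupies 7 bytes (plus the terminating NUL), so a buffer sized by character count would be one byte short.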

I know what it is, but between file encodings, duplicated characters (some supported and others not), how compilers compile the code, and so on, it leads to a snafu of issues. Supporting multiple languages in an application when different encodings are being used introduces all kinds of complexity, and with complexity come problems.

In _vsnprintf in lv_printf.c there is a cast to char from the return of va_arg:

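case 'c' : {

…

out((char)va_arg(va, int), buffer, idx++, maxlen);

That’s why ‘c’ does not work: the argument is cast to a single char, so 0xB0 is written as one raw byte, which is not a valid UTF-8 sequence on its own.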

As a hack I added:

if (flags & FLAGS_LONG) {

out(0xC2, buffer, idx++, maxlen);

}

right before the line:

out((char)va_arg(va, int), buffer, idx++, maxlen);

and that works for %lc. I have not looked at all the params and the length of buffer etc. so I would not use this code in production, it was just a test.

So with that, the following works:

lv_label_set_text_fmt(ui_TempSPLabel, "%4.1f%lcC", val, '°');

as well as:

lv_label_set_text_fmt(ui_TempSPLabel, "%4.1f%lcC", val, 0xB0);

of course it would probably make the most sense if %lc also worked for 0xC2B0 as the last parameter but I haven’t really thought that through

Don’t try such poking around.

That is absolute b…

Again, in UTF-8 a character can be one, two, three or four bytes long.

And the first byte is not always 0xc2. It is 0xc2 for the °. See here utf-8.

Just don’t use the format specifier %c when using utf-8.
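If you do want to start from a code point such as 0x00B0 rather than a literal, one option is to encode it into a small string yourself and pass that with %s. This is only a sketch: `utf8_encode` and `format_temperature` are hypothetical helper names, not LVGL functions, and standard `snprintf` is used here so the example stands on its own.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdio.h>

/* Hypothetical helper: encodes code point `cp` as UTF-8 into `out`
 * (at least 5 bytes) and NUL-terminates it. Returns the number of
 * bytes written, or 0 if `cp` is not a valid code point. */
size_t utf8_encode(uint32_t cp, char out[5])
{
    assert(out != NULL);
    size_t n;

    if (cp < 0x80) {
        out[0] = (char)cp;
        n = 1;
    } else if (cp < 0x800) {
        out[0] = (char)(0xC0 | (cp >> 6));
        out[1] = (char)(0x80 | (cp & 0x3F));
        n = 2;
    } else if (cp < 0x10000) {
        if (cp >= 0xD800 && cp <= 0xDFFF) {   /* surrogates are invalid */
            out[0] = '\0';
            return 0;
        }
        out[0] = (char)(0xE0 | (cp >> 12));
        out[1] = (char)(0x80 | ((cp >> 6) & 0x3F));
        out[2] = (char)(0x80 | (cp & 0x3F));
        n = 3;
    } else if (cp <= 0x10FFFF) {
        out[0] = (char)(0xF0 | (cp >> 18));
        out[1] = (char)(0x80 | ((cp >> 12) & 0x3F));
        out[2] = (char)(0x80 | ((cp >> 6) & 0x3F));
        out[3] = (char)(0x80 | (cp & 0x3F));
        n = 4;
    } else {
        out[0] = '\0';
        return 0;
    }
    out[n] = '\0';
    return n;
}

/* Hypothetical helper: formats e.g. 22.1 as "22.1°C" in UTF-8,
 * using %s for the multi-byte degree sign instead of %c. */
int format_temperature(char *buf, size_t buf_size, double temp_c)
{
    char deg[5];
    utf8_encode(0x00B0, deg);
    return snprintf(buf, buf_size, "%4.1f%sC", temp_c, deg);
}
```

The same `deg` string can be passed to the call that was reported as working earlier in the thread, i.e. `lv_label_set_text_fmt(ui_TempSPLabel, "%4.1f%sC", val, deg)`, and the caller no longer has to care how many bytes the character needs.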

Although the documentation says %c prints a ‘single character’, that means a single ‘byte’.

> Don’t try such poking around.
>
> That is absolute b…

Did you miss where I said “it was just a test”? I was trying to understand where the limitation of the %c in the call was. Besides, the documentation does not specify anything about the parameters to the lv_label_set_text_fmt() function so I went looking. It all depends on what the author of the code wants. If they want %lc or %C to mean two bytes, three bytes, or anything else then there is no rule that says it MUST be the same as some old version of printf. It is a function in lvgl. It can be made to do whatever.

> And the first byte is not always 0xc2

You must have missed where I said “As a hack I added:”

I didn’t claim that it was a cool addition to the code and would work for any encoding one desires. I also said “of course it would probably make the most sense if %lc also worked for 0xC2B0 as the last parameter”, meaning that if say %lc was for multi bytes, then that would be the way to specify it.

> Although the documentation says %c prints a ‘single character’, that means a single ‘byte’.

Cool, I’ll get on a 16 bits per byte machine and it should work fine when I put 0xC2B0.

No, I did not miss that ‘it was just a test’.

Sorry if I stepped on your toes…

Sorry, I have seen a lot of code in my life, with a lot of excuses.

Of course you can do whatever you want with your code.

But take care if you update lvgl to new version.

> I also said “of course it would probably make the most sense if %lc also worked for 0xC2B0 as the last parameter”, meaning that if say %lc was for multi bytes, then that would be the way to specify it.

Sorry, I do not think that it would make sense. What would you do if the utf-8 consist of three bytes?

Should the caller be responsible for setting the correct specifier (%c or %lc) depending whether the utf-8 character consists of one byte or two bytes?

> Cool, I’ll get on a 16 bits per byte machine and it should work fine when I put 0xC2B0.

Hmm, I don’t know what you want to say.

> Sorry if I stepped on your toes…

Haha, all good.

> Sorry, I do not think that it would make sense. What would you do if the utf-8 consist of three bytes?
>
> Should the caller be responsible for setting the correct specifier (%c or %lc) depending whether the utf-8 character consists of one byte or two bytes?

Yes, the caller should be responsible for specifying how many bytes. If I was going to put it in, then probably something like %c %lc %llc or %c %2c %3c. I’m not saying it should go in, I was just looking at the code to see why it was not working for me.

> Hmm, I don’t know what you want to say.

Just that there are machines where a byte is not 8 bits. If I got on a 16bit per byte machine, I’d probably expect 0xC2B0 to work.

Hi, All. I know this was already considered resolved, but I just ran into the same issue (thank you for posting this) and I found another solution that is working reliably:

sprintf(buffer, "%d °C", degrees);

This embeds the 0xC2 in the string BEFORE the 0xB0, and so far it has been working nicely both in the constructor and updates.

Note: I created the custom font with these ranges: 0x20-0x7F,0xB0 and in my font file [ASCII encoded], it lists the character like this:

/* U+00B0 "°" */

0xf, 0x83, 0x4a, 0x20, ...

That was my hint to try simply copy/pasting that string into my sprintf format string.

Hope that helps.

Kind regards,

Vic

Postscript: Even more reliable (where you don’t have to worry about the file encoding) is this:

sprintf(buffer, "%d " "\xC2\xB0" "C", degrees);

(The “C” at the end has to be separate, otherwise the compiler will try to interpret “…\xB0C” as a single hex escape with three hex digits.) The compiler (for C programmers not already familiar with this) joins the three adjacent literals into a single NUL-terminated string.
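As a sketch of the same idea with a bounded buffer, assuming you are free to swap `sprintf` for `snprintf` (`format_degrees` is a hypothetical wrapper name, not something from LVGL):

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>

/* Hypothetical wrapper: writes e.g. "22 °C" as UTF-8 into `buffer`.
 * The degree sign is spelled with hex escapes so the result does not
 * depend on the encoding of the source file. The trailing "C" is a
 * separate literal so it is not absorbed into the \xB0 escape. */
int format_degrees(char *buffer, size_t size, int degrees)
{
    assert(buffer != NULL && size > 0);
    return snprintf(buffer, size, "%d " "\xC2\xB0" "C", degrees);
}
```

With `degrees` set to 22 the buffer holds six bytes: '2', '2', a space, 0xC2, 0xB0 and 'C'. The return value is the length that was needed, so a result greater than or equal to `size` means the text was truncated.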