Hi Marouane,

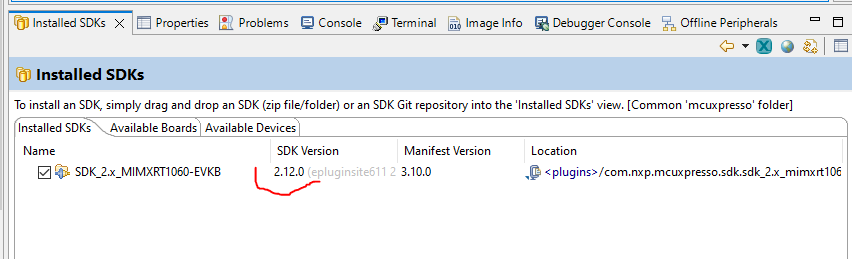

Thank you for all the info. Having looked through everything, this issue appears to be similar to this one.

So I believe the NXP drivers for the IMXRT1060-EVKB board need to be updated to work in a different way for Version 8 onwards… They currently seem to be configured with direct mode disabled and full refresh enabled, using full-screen double buffers. I would suggest they should instead run with full refresh disabled and direct mode enabled, still using full-screen double buffers.

I have posted the code I used to fix this issue on my own hardware platform, after migrating from version 7 to version 8 of LVGL, on the linked post, but there hasn’t been any response from @gianlucacornacchia to say whether it was useful or not.

Here is an example of a potentially modified lvgl_support.c (15.4 KB) from the latest MCUXpresso IDE v11.6.0_8187. (Please bear in mind I can’t test this as I have no hardware, so hopefully this will just help you get on the right track to solve the issue!) The required changes are hopefully as follows:

```c
void lv_port_disp_init(void)
{
    static lv_disp_draw_buf_t disp_buf;

    lv_disp_draw_buf_init(&disp_buf, s_frameBuffer[0], s_frameBuffer[1], LCD_WIDTH * LCD_HEIGHT);

    /*-------------------------
     * Initialize your display
     * -----------------------*/
    DEMO_InitLcd();

    /*-----------------------------------
     * Register the display in LittlevGL
     *----------------------------------*/
    lv_disp_drv_init(&disp_drv); /*Basic initialization*/

    /*Set up the functions to access to your display*/

    /*Set the resolution of the display*/
    disp_drv.hor_res = LCD_WIDTH;
    disp_drv.ver_res = LCD_HEIGHT;

    /*Used to copy the buffer's content to the display*/
    disp_drv.flush_cb = DEMO_FlushDisplay;

    disp_drv.wait_cb = DEMO_WaitFlush;

#if LV_USE_GPU_NXP_PXP
    disp_drv.clean_dcache_cb = DEMO_CleanInvalidateCache;
#endif

    /*Set a display buffer*/
    disp_drv.draw_buf = &disp_buf;

    /* Partial refresh */
    disp_drv.full_refresh = 0; /* CHANGE 1 */
    disp_drv.direct_mode  = 1; /* CHANGE 2 */

    /*Finally register the driver*/
    lv_disp_drv_register(&disp_drv);
}
```

You will need a new function to update the second full-screen buffer when the driver flips the buffers, something like this:

```c
/*CHANGE 3*/
/* Copies every invalidated area from the buffer just drawn (colour_p) into the
 * other full-screen buffer so both stay in sync. Needs <string.h> for memcpy(). */
static void DEMO_UpdateDualBuffer(lv_disp_drv_t *disp_drv, const lv_area_t *area, lv_color_t *colour_p)
{
    lv_disp_t *disp = _lv_refr_get_disp_refreshing();
    lv_coord_t y, hres = lv_disp_get_hor_res(disp);
    uint16_t a;
    lv_color_t *buf_cpy;

    (void)area; /* Unused: the display's own list of invalid areas is walked instead */

    /* Pick whichever buffer is NOT the one that was just drawn */
    if (colour_p == disp_drv->draw_buf->buf1)
        buf_cpy = disp_drv->draw_buf->buf2;
    else
        buf_cpy = disp_drv->draw_buf->buf1;

    for (a = 0; a < disp->inv_p; a++) {
        if (disp->inv_area_joined[a]) continue; /* Only copy areas which aren't part of another area */

        lv_coord_t w = lv_area_get_width(&disp->inv_areas[a]);

        /* Copy the area one row at a time */
        for (y = disp->inv_areas[a].y1; y <= disp->inv_areas[a].y2 && y < disp_drv->ver_res; y++) {
            memcpy(buf_cpy + (y * hres + disp->inv_areas[a].x1),
                   colour_p + (y * hres + disp->inv_areas[a].x1),
                   w * sizeof(lv_color_t));
        }
    }
}
```
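To make the row-by-row copy easier to reason about (and to try out on a PC without the board), here is a minimal standalone sketch of the same idea. Everything in it is hypothetical and not part of LVGL or the NXP SDK: `pixel_t` stands in for `lv_color_t`, `rect_t` for `lv_area_t`, and `FB_WIDTH`/`FB_HEIGHT` describe a made-up tiny panel. Unlike the function above, it also clamps each rectangle to the panel, which is an extra safety step I have assumed is worth having.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define FB_WIDTH  8 /* hypothetical tiny panel, for illustration only */
#define FB_HEIGHT 4

typedef uint16_t pixel_t;                      /* stand-in for lv_color_t (RGB565) */
typedef struct { int x1, y1, x2, y2; } rect_t; /* inclusive corners, like lv_area_t */

/* Copy one rectangle, row by row, between two full-screen buffers. */
static void copy_rect(pixel_t *dst, const pixel_t *src, const rect_t *r)
{
    assert(dst != NULL && src != NULL && r != NULL);

    /* Clamp to the panel so a bad area can never write outside the buffer */
    int x1 = r->x1 < 0 ? 0 : r->x1;
    int y1 = r->y1 < 0 ? 0 : r->y1;
    int x2 = r->x2 >= FB_WIDTH ? FB_WIDTH - 1 : r->x2;
    int y2 = r->y2 >= FB_HEIGHT ? FB_HEIGHT - 1 : r->y2;
    if (x1 > x2 || y1 > y2) return; /* nothing visible to copy */

    size_t row_bytes = (size_t)(x2 - x1 + 1) * sizeof(pixel_t);
    for (int y = y1; y <= y2; y++) {
        size_t off = (size_t)y * FB_WIDTH + (size_t)x1;
        memcpy(dst + off, src + off, row_bytes);
    }
}

/* Copy every area that has not been merged ("joined") into another one. */
static void sync_buffers(pixel_t *dst, const pixel_t *src,
                         const rect_t *areas, const uint8_t *joined, size_t count)
{
    for (size_t i = 0; i < count; i++) {
        if (joined[i]) continue; /* already covered by the area it was joined into */
        copy_rect(dst, src, &areas[i]);
    }
}
```

Calling `sync_buffers(back, front, areas, joined, n)` after a frame has been drawn into `front` should leave `back` identical to it inside the listed areas and untouched everywhere else.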

The flush function will need to check and decide when to update the second buffer, maybe something like this:

```c
static void DEMO_FlushDisplay(lv_disp_drv_t *disp_drv, const lv_area_t *area, lv_color_t *color_p)
{
#if defined(SDK_OS_FREE_RTOS)
    /*
     * Before new frame flushing, clear previous frame flush done status.
     */
    (void)xSemaphoreTake(s_frameSema, 0);
#endif

    /*CHANGE 4*/
    if (lv_disp_flush_is_last(disp_drv)) {
        DCACHE_CleanInvalidateByRange((uint32_t)color_p, DEMO_FB_SIZE);

        ELCDIF_SetNextBufferAddr(LCDIF, (uint32_t)color_p);

        DEMO_UpdateDualBuffer(disp_drv, area, color_p);

        s_framePending = true;
    }
}
```

I have no idea if this code will work, but please give it a try. Hopefully, if it doesn’t, this is enough of a head start for you to be able to debug it on some real hardware.

A further enhancement, once this is working, would be to restructure the code so LVGL never gets blocked by the semaphore. As I have no hardware to test on, I don’t know how often or for how long the code actually blocks on the semaphore, and I don’t know enough about the NXP hardware to speculate at this time on the best way to achieve this. An early iteration of my driver also used a semaphore, and it proved to introduce a delay in the execution path. Ultimately I managed to engineer the semaphore out by using a simple flag and not blocking the execution.
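As a rough illustration of the flag idea (not the actual code from my driver, and untested on NXP hardware), the pattern could look something like the sketch below. All names here are hypothetical stand-ins: `hw_set_next_buffer()` plays the role of `ELCDIF_SetNextBufferAddr()`, `gui_flush_ready()` plays the role of `lv_disp_flush_ready()`, and `frame_done_isr()` is whatever interrupt your LCD controller raises when the new buffer address has been latched. It assumes it is acceptable on your port to signal flush-ready from interrupt context.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

static volatile bool      s_flipPending = false; /* set by flush, cleared by the ISR */
static volatile uintptr_t s_nextBuffer  = 0;     /* last address handed to the "hardware" */
static unsigned           s_readyCount  = 0;     /* how many times flush-ready was signalled */

/* Hypothetical stand-in for ELCDIF_SetNextBufferAddr() */
static void hw_set_next_buffer(uintptr_t addr) { s_nextBuffer = addr; }

/* Hypothetical stand-in for lv_disp_flush_ready() */
static void gui_flush_ready(void) { s_readyCount++; }

/* Flush: queue the buffer flip and return straight away, with no semaphore wait. */
static void flush_nonblocking(const void *frame)
{
    assert(frame != NULL);
    hw_set_next_buffer((uintptr_t)frame);
    s_flipPending = true; /* the ISR will complete the handshake later */
}

/* Frame-done interrupt: the controller has latched the new buffer address. */
static void frame_done_isr(void)
{
    if (s_flipPending) {
        s_flipPending = false;
        gui_flush_ready(); /* tell the GUI library the old buffer is free again */
    }
    /* Interrupts with no flip pending are ignored */
}
```

The point of the design is that the GUI task only ever sets a flag and carries on, so any waiting happens inside the library when it actually needs the buffer, rather than in the flush callback itself.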

Let me know how you get on.

Kind Regards,

Pete

I have created projects using both individual global objects for all components and my own global structures. Having done both, I prefer the structures approach, as it can be built in the same logical order as the layout of the real GUI, which I find easier to remember.

It’s not good that you are experiencing a crash. It might be worth enabling asserts and logging for LVGL to help track down that issue?
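For reference, in LVGL v8 these are switched on in lv_conf.h. A fragment along these lines should be a reasonable starting point. The macro names are the ones I would expect from the v8 lv_conf_template.h, so please check them against the template shipped with your LVGL version, and note that `LV_LOG_PRINTF` assumes your platform has a working `printf`.

```c
/* lv_conf.h fragment: enable LVGL's built-in checks and logging while debugging */

/* Asserts: halt on NULL parameters, failed allocations and corrupted data */
#define LV_USE_ASSERT_NULL          1
#define LV_USE_ASSERT_MALLOC        1
#define LV_USE_ASSERT_STYLE         1 /* slower, but catches uninitialised styles */
#define LV_USE_ASSERT_MEM_INTEGRITY 1 /* slower, checks the heap after critical operations */
#define LV_USE_ASSERT_OBJ           1 /* slower, checks object type and existence */

/* Logging: print warnings and errors; lower the level to get more detail */
#define LV_USE_LOG    1
#define LV_LOG_LEVEL  LV_LOG_LEVEL_WARN
#define LV_LOG_PRINTF 1 /* route log output through printf */
```

The slower asserts are best turned off again once the crash has been found.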