- STM32 Cortex-M0 on a proprietary board with a 2.8" display

- Both LVGL v9.2 and v8.4 have been tried.

- No OS

- I want a working UI with touch functionality, but without the need for fancy graphics.

- I’ve done extensive hardware debugging (~30 h) trying to find where the problem is. The allocated LV_MEM is more than enough (25% usage, ~1% fragmentation).

- Tried LV_MEM_CUSTOM = 1 with the heap size set to 0x2000 (8 KB): no luck.
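To put the memory figures above in numbers, the sketch below tallies the static RAM that the configuration later in this post reserves for LVGL: two 320 × 10 draw buffers plus the 8 KB LV_MEM pool (or the 0x2000-byte heap when LV_MEM_CUSTOM = 1). It assumes LV_COLOR_DEPTH 16, i.e. a 2-byte lv_color_t, which the post does not state; the macro and function names are illustrative, not LVGL's.

```c
#include <stdint.h>

/* Values taken from the configuration in this post. */
#define HOR_RES       320u
#define BUF_LINES     10u
#define LV_MEM_BYTES  (8u * 1024u)   /* LV_MEM_SIZE, or the 0x2000 heap */

/* Assumption: LV_COLOR_DEPTH 16, so one lv_color_t occupies 2 bytes. */
#define COLOR_BYTES   2u

/* Bytes for one partial draw buffer holding BUF_LINES full-width lines. */
static uint32_t draw_buf_bytes(void)
{
    return HOR_RES * BUF_LINES * COLOR_BYTES;
}

/* Both draw buffers plus LVGL's memory pool. This excludes LVGL's own
 * globals, the C stack and everything else the application uses. */
static uint32_t lvgl_static_ram(void)
{
    return 2u * draw_buf_bytes() + LV_MEM_BYTES;
}
```

Under these assumptions the total is 20,992 bytes before the stack and the rest of the product are counted, which is a large share of the SRAM on STM32F0-class parts (typically 32 KB or less).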

The issue is that the processor ends up in HardFault_Handler without any warning beforehand, even with the simplest Hello World example. Depending on the situation, it crashes anywhere from 10 seconds to 5 minutes after startup. Even if I only initialize LVGL without drawing any content, it won’t stay alive for long. I’ve also drawn extensive content using RLE-compressed images and can get everything to render, but stability is still the problem.

I’ve checked that LV_MEM does not overflow, that the allocated buffers don’t overflow, and that there is plenty of stack. LVGL trace logging and asserts are enabled and LV_MEM_JUNK is defined, yet none of them gives any hint of the root cause. I’ve run lv_timer_handler alone in the main loop and it still crashes. I’ve also commented out lv_timer_handler entirely, without any luck. If I remove the LVGL-related code, the product works flawlessly.
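Since the fault gives no hint by itself, one common way to find where it happens is to have the HardFault handler save the registers the core pushes on exception entry, in particular the stacked PC and LR. Below is a minimal sketch for GCC on a Cortex-M0 (ARMv6-M). It would replace the body of the existing HardFault_Handler (in CubeMX projects that usually lives in stm32f0xx_it.c); hardfault_decode, hardfault_capture and g_fault_frame are hypothetical names. The assembly is only compiled for ARMv6-M targets, so the decoding part can also be built and tested on a PC.

```c
#include <stdint.h>

/* Registers the Cortex-M core pushes on exception entry, in stacking order. */
typedef struct {
    uint32_t r0, r1, r2, r3, r12;
    uint32_t lr;    /* link register of the interrupted code */
    uint32_t pc;    /* return address: usually the faulting instruction */
    uint32_t xpsr;
} exc_frame_t;

/* Copy the 8 stacked words into a struct. Plain C, so it runs on a PC too. */
void hardfault_decode(const uint32_t *sp, exc_frame_t *out)
{
    out->r0   = sp[0];
    out->r1   = sp[1];
    out->r2   = sp[2];
    out->r3   = sp[3];
    out->r12  = sp[4];
    out->lr   = sp[5];
    out->pc   = sp[6];
    out->xpsr = sp[7];
}

#if defined(__ARM_ARCH_6M__)
/* Global so it can be inspected from the debugger after the fault. */
volatile exc_frame_t g_fault_frame;

/* Receives the stack pointer that was active when the fault occurred. */
__attribute__((used)) void hardfault_capture(const uint32_t *sp)
{
    exc_frame_t f;
    hardfault_decode(sp, &f);
    g_fault_frame = f;
    for (;;) {
        /* Halt here with the debugger and read g_fault_frame. */
    }
}

/* Bit 2 of EXC_RETURN (in LR) tells whether MSP or PSP held the frame.
 * Without an RTOS it is normally MSP. hardfault_capture must stay in this
 * file so the Thumb-1 branch below remains within range. */
__attribute__((naked)) void HardFault_Handler(void)
{
    __asm volatile(
        "movs r0, #4              \n"
        "mov  r1, lr              \n"
        "tst  r0, r1              \n"
        "beq  1f                  \n"
        "mrs  r0, psp             \n"
        "b    2f                  \n"
        "1:                       \n"
        "mrs  r0, msp             \n"
        "2:                       \n"
        "b    hardfault_capture   \n");
}
#endif
```

After the crash, `arm-none-eabi-addr2line -e <your firmware .elf> <g_fault_frame.pc>` maps the captured PC to a source line, which narrows the search considerably compared with only knowing that a HardFault occurred.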

```c
#define LV_MEM_SIZE (8U * 1024U)   /* in lv_conf.h */

__attribute__((aligned(4))) static lv_color_t buf_1[320 * 10];
__attribute__((aligned(4))) static lv_color_t buf_2[320 * 10];

static lv_disp_t * disp;
```

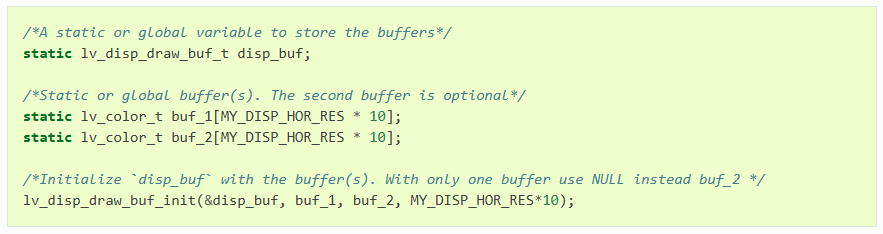

```c
static lv_disp_draw_buf_t disp_buf;
static lv_disp_drv_t disp_drv;

lv_init();

/* Last argument is the buffer size in pixels, not bytes */
lv_disp_draw_buf_init(&disp_buf, buf_1, buf_2, 320 * 10);

lv_disp_drv_init(&disp_drv);
disp_drv.draw_buf = &disp_buf;            /* Set an initialized buffer */
disp_drv.flush_cb = my_flush_cb;          /* Set a flush callback to draw to the display */
disp_drv.hor_res  = 320;                  /* Set the horizontal resolution in pixels */
disp_drv.ver_res  = 240;                  /* Set the vertical resolution in pixels */
disp = lv_disp_drv_register(&disp_drv);   /* Register the driver and save the created display object */

lv_example_hello_world();

while (1)
{
    lv_timer_handler_run_in_period(5);    /* lv_tick_inc(1) is called properly in a timer interrupt */
}
```
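The main loop relies on lv_timer_handler_run_in_period(5), which only calls lv_timer_handler() once at least 5 ms have elapsed on the tick counter that lv_tick_inc(1) advances. The host-runnable sketch below mirrors that gating with a hypothetical counter g_tick_ms and hypothetical functions tick_isr() and period_elapsed(); it is not LVGL's code. It shows two details that matter when a timer ISR feeds the tick: the shared counter is volatile, and elapsed time is computed with unsigned subtraction so it stays correct when the 32-bit counter wraps.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical 1 ms tick. On the target, lv_tick_inc(1) in the timer ISR
 * plays this role. volatile because an ISR writes it and the main loop
 * reads it; an aligned 32-bit load is a single LDR on Cortex-M0, so the
 * read needs no locking. */
static volatile uint32_t g_tick_ms;

/* Body of the (hypothetical) 1 ms timer interrupt. */
void tick_isr(void)
{
    g_tick_ms++;
}

/* Returns true at most once per period_ms and updates *last when it does,
 * like the gating in front of lv_timer_handler(). */
bool period_elapsed(uint32_t *last, uint32_t period_ms)
{
    uint32_t now = g_tick_ms;
    /* Unsigned subtraction gives the right interval across a wrap past 0xFFFFFFFF. */
    if ((uint32_t)(now - *last) >= period_ms) {
        *last = now;
        return true;
    }
    return false;
}
```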

I’ve run my UI in the Windows simulator without any issues. About 90% of the similar issues other people have had were related to LV_MEM being too small, but I’m 99% sure that is not the case here.