Description

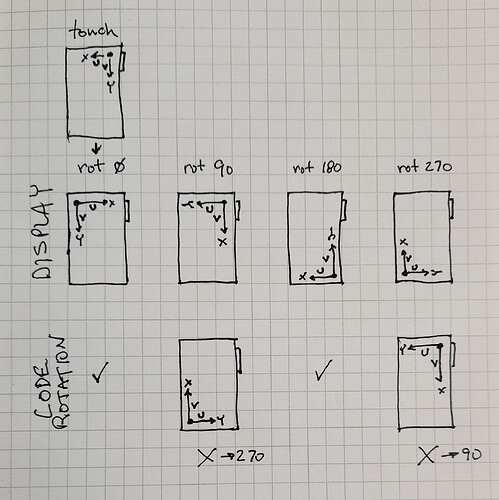

My input device callback returns touchscreen coordinates that work for the 0 and 180 degree rotations. For the 90 and 270 degree rotations, however, lv_indev.c:indev_pointer_proc() rotates those coordinates in the opposite direction from the rotation applied to the display, so touches no longer line up with what is drawn. I’ve been able to counteract the ‘errant’ rotation in those two cases and get correct results, but I’d like to understand why it happens, since it may point to a more serious problem.
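Reading the transform quoted in the callback’s comment further down as maps from the rotation-0 point $(x, y)$ returned by the callback to the rotated screen point, and assuming `disp->hor_res` and `disp->ver_res` hold the native (unrotated) resolution $W \times H = 240 \times 320$, the two landscape cases work out to:

$$R_{90}(x, y) = (H - 1 - y,\ x), \qquad R_{270}(x, y) = (y,\ W - 1 - x)$$

For example, the native top-left corner $(0, 0)$ goes to $(H - 1, 0)$, the top-right of the landscape screen, under $R_{90}$, and to $(0, W - 1)$, the bottom-left, under $R_{270}$. The two maps differ only by a 180 degree turn of the landscape screen, so if the display and LVGL disagree on direction, each touch should land at the point mirrored through the screen center.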

What MCU/Processor/Board and compiler are you using?

VS Code with PlatformIO, using:

- `platform = espressif32`
- `board = adafruit_feather_esp32_v2`
- `framework = arduino`

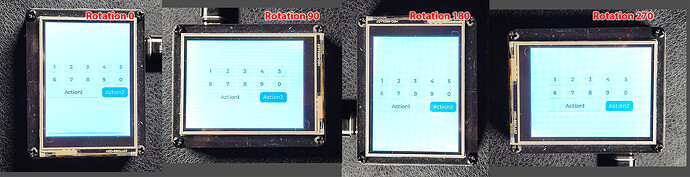

Adafruit TFT FeatherWing:

- 2.4" 320x240 ILI9341 display
- STMPE811 touchscreen controller

What LVGL version are you using?

- lvgl/lvgl @ 9.2.2
- Bodmer/TFT_eSPI @ 2.5.43

What do you want to achieve?

My touch read callback returns valid rotation-0 coordinates that work fine. My understanding is that LVGL should then rotate them to match any other display rotation. Instead, I have to compensate for the rotation LVGL applies in the 90 and 270 degree (landscape) cases, because it seems to go the wrong direction, and I’d like to understand why. Perhaps TFT_eSPI isn’t applying the proper display rotation? But the link between the two is made inside the LVGL library itself. Or is there some additional configuration setting I’m missing to keep them in sync?

What have you tried so far?

Calibration is fine.

Compensating for the ‘errant’ rotation has it working, but is there an underlying unknown issue?

Code to reproduce

This is the code for the input device callback:

/*Read the touchpad*/

void my_touchpad_read( lv_indev_t * indev, lv_indev_data_t * data )

{

lv_display_t * disp = lv_indev_get_display(indev);

// active rotation

auto rot = lv_display_get_rotation(disp);

// res changes with rotation

auto hor_res = lv_display_get_horizontal_resolution(disp);

auto ver_res = lv_display_get_vertical_resolution(disp);

bool touched = !ts.bufferEmpty();

if(!touched)

{

data->state = LV_INDEV_STATE_RELEASED;

if (!wasPressed)

return;

wasPressed = false;

}

else

{

TS_Point p = ts.getPoint();

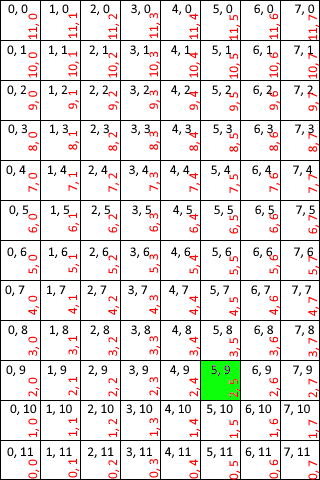

/*

TFT_WIDTH and TFT_HEIGHT are the default portrait dimensions, 240x320

When the USB port is at top right, portrait, the TS origin is at top right,

with X left along the short side and Y down along the long side.

Scale input TS coordintes using the calibration #'s.

NOTE:

The LV_DISPLAY_ROTATION_0 case is apparently the coordinate system we should use

for the return coordinates.

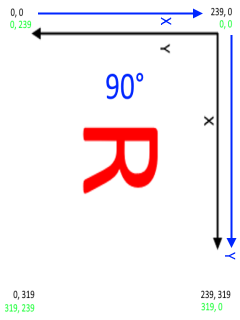

Here is the transform LVGL applies to the coordinates in lv_indev.c:indev_pointer_proc() after

calling us:

if(disp->rotation == LV_DISPLAY_ROTATION_180 || disp->rotation == LV_DISPLAY_ROTATION_270) {

data->point.x = disp->hor_res - data->point.x - 1;

data->point.y = disp->ver_res - data->point.y - 1;

}

if(disp->rotation == LV_DISPLAY_ROTATION_90 || disp->rotation == LV_DISPLAY_ROTATION_270) {

int32_t tmp = data->point.y;

data->point.y = data->point.x;

data->point.x = disp->ver_res - tmp - 1;

}

The second part rotates the touch point 90 degrees, but in the opposite direction from the display.

Either this LVGL rotation or what's being done in TGT_eSPI to rotate the display must be 'wrong'.

*/

int16_t x = 0, y = 0;

switch (rot)

{

case LV_DISPLAY_ROTATION_0: // portrait (USB top right)

case LV_DISPLAY_ROTATION_180: // portrait (USB bottom left)

// flip the X axis for conversion from the TS system to the ROT_0 system

// this works for the ROT_180 case too (without the 'wrong' 90 degree rotation)

x = map(p.x, calib[0], calib[1], TFT_WIDTH-1, 0);

y = map(p.y, calib[2], calib[3], 0, TFT_HEIGHT-1);

break;

case LV_DISPLAY_ROTATION_90: // landscape (USB top left)

case LV_DISPLAY_ROTATION_270: // landscape (USB bottom right)

// this compensates for the 'wrong' 90 degree rotation

x = map(p.x, calib[0], calib[1], 0, TFT_WIDTH-1);

y = map(p.y, calib[2], calib[3], TFT_HEIGHT-1, 0);

break;

default:

Serial.print("ROT NOT IMPLEMENTED");

break;

}

data->state = LV_INDEV_STATE_PRESSED;

data->point.x = constrain(x, 0, TFT_WIDTH-1);

data->point.y = constrain(y, 0, TFT_HEIGHT-1);

wasPressed = true;

}

Serial.print("rot: ");

Serial.print(rot);

Serial.print(" [");

Serial.print(hor_res);

Serial.print("x");

Serial.print(ver_res);

Serial.print("] ");

Serial.print("{");

Serial.print(data->point.x); Serial.print(",");

Serial.print(data->point.y);

Serial.print("} ");

Serial.println(data->state);

}