I’m using RK35XX-series CPUs (the RK3576 and RK3562, to be specific) with LVGL v9.2 on Linux.

I am trying to accelerate 2D rendering with the embedded Mali-G52 GPU, but I can’t figure out how.

It seems a little strange to me that the ST DMA2D gets hardware acceleration while the more powerful GPU doesn’t, so I guess there must be a way to achieve this, right?

I haven’t found any sample implementations for the Mali GPU. Should I refer to the “VG-Lite General GPU” section of the documentation?

Any help would be appreciated. Thanks!

What display backends are you using?

I’m creating a project that uses LVGL on a Radxa Zero 3E (RK3566). It runs Weston (Wayland), and Weston uses libdrm to, theoretically, access the GPU.

I need to find a way to analyse the GPU usage…
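One possible starting point, assuming a Rockchip vendor (BSP) kernel: the Mali GPU’s devfreq node on those kernels is commonly reported to expose a `load` file that reads like `23@300000000Hz` (utilisation in percent @ current frequency). Both the path (something like `/sys/class/devfreq/<address>.gpu/load`) and the format are assumptions that depend on the kernel build; mainline kernels may not provide this file at all. The helper names `parse_gpu_load` and `read_gpu_load` are hypothetical. A minimal parser sketch:

```c
#include <assert.h>
#include <stdio.h>

/* One sample of GPU load.
 * ASSUMPTION: the file contains "<percent>@<frequency>Hz", as Rockchip BSP
 * kernels are reported to print; verify this on your own board. */
typedef struct {
    int load_percent;
    unsigned long long freq_hz;
} gpu_load_t;

/* Parses text such as "23@300000000Hz".
 * Returns 0 on success, -1 if the text does not match the assumed format. */
int parse_gpu_load(const char *text, gpu_load_t *out)
{
    assert(text != NULL && out != NULL);
    int load;
    unsigned long long freq;
    if (sscanf(text, "%d@%lluHz", &load, &freq) != 2)
        return -1;
    if (load < 0 || load > 100)
        return -1;
    out->load_percent = load;
    out->freq_hz = freq;
    return 0;
}

/* Reads the load file once and parses it. The path is supplied by the
 * caller because it differs between SoCs and kernels. */
int read_gpu_load(const char *path, gpu_load_t *out)
{
    char buf[64];
    FILE *f = fopen(path, "r");
    if (f == NULL)
        return -1;
    char *ok = fgets(buf, sizeof buf, f);
    fclose(f);
    return ok != NULL ? parse_gpu_load(buf, out) : -1;
}
```

Polling `read_gpu_load` once per second while the UI animates gives a rough picture of whether the GPU is being used at all.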

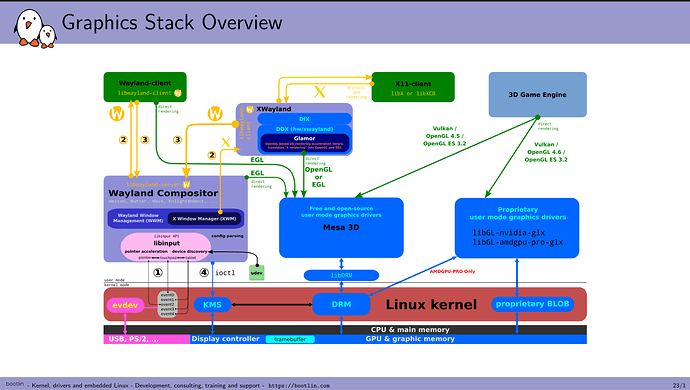

Image from the Bootlin training materials.

What kind of UI are you trying to build with LVGL?

Thank you.

Please correct me if I’m wrong: based on the block diagram you provided, it seems that Wayland needs an intermediate layer such as OpenGL or Vulkan, which then uses the GPU through libdrm? It also appears that LVGL does not yet have complete OpenGL support?

For performance reasons, I have chosen not to use any desktop environment on the target device I’m currently working on. The alternative I’m considering is using the Linux framebuffer or libdrm directly, without a desktop.

You can add a new renderer to LVGL, using the renderers that already ship with LVGL as a reference. You would need to write the code that drives your specific 2D accelerator.
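As far as I can tell from the built-in renderers (the DMA2D one, for example), an LVGL v9 renderer is a “draw unit” whose `evaluate_cb` inspects each draw task and claims the ones it can handle by lowering the task’s `preference_score` below the software renderer’s default of 100; its `dispatch_cb` then executes the claimed tasks. The self-contained model below mimics only that selection step. All names in it (`demo_task_t`, `gpu_evaluate`, `pick_unit`, and so on) are hypothetical and are not LVGL’s API, and it assumes a GPU unit that accelerates only fills and image blits:

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical, simplified stand-ins for LVGL draw tasks. NOT LVGL's real types. */
typedef enum { TASK_FILL, TASK_IMAGE, TASK_LABEL, TASK_ARC } demo_task_type_t;

enum { UNIT_NONE = 0, UNIT_SW = 1, UNIT_GPU = 2 };

typedef struct {
    demo_task_type_t type;
    int preference_score;   /* lower = more preferred; 100 = software default */
    int preferred_unit_id;  /* unit that will execute the task */
} demo_task_t;

/* Software unit: can draw anything, so it takes every task no other unit beat. */
static void sw_evaluate(demo_task_t *t)
{
    if (t->preference_score >= 100) {
        t->preference_score = 100;
        t->preferred_unit_id = UNIT_SW;
    }
}

/* Hypothetical GPU unit: claims only the task types it can accelerate. */
static void gpu_evaluate(demo_task_t *t)
{
    bool supported = (t->type == TASK_FILL || t->type == TASK_IMAGE);
    if (supported && t->preference_score > 70) {
        t->preference_score = 70;
        t->preferred_unit_id = UNIT_GPU;
    }
}

/* Runs every unit's evaluate step on a fresh task and returns the winner.
 * The result does not depend on the order of the evaluators. */
int pick_unit(demo_task_type_t type)
{
    demo_task_t t = { type, 100, UNIT_NONE };
    void (*evaluators[])(demo_task_t *) = { sw_evaluate, gpu_evaluate };
    for (size_t i = 0; i < sizeof evaluators / sizeof evaluators[0]; i++)
        evaluators[i](&t);
    assert(t.preferred_unit_id != UNIT_NONE); /* the SW unit always accepts */
    return t.preferred_unit_id;
}
```

The benefit of this scheme is graceful fallback: anything the accelerator cannot do (text and arcs in this model) simply stays on the software renderer, so a new unit can start small and grow.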

I recommend against the framebuffer (fbdev); use DRM, Wayland, or even SDL instead.

fbdev is an old interface, and it’s not very performant.
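To give a feel for the cost: as far as I know, an fbdev flush typically comes down to the CPU copying the rendered area row by row into the memory-mapped framebuffer. The sketch below models only that copy with plain buffers instead of a real `/dev/fb0` mapping; `copy_area_to_fb` and its parameters are hypothetical, and 32-bit pixels are assumed.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Copies a w x h rectangle of 32-bit pixels from a tightly packed render
 * buffer into a framebuffer at position (x, y). fb_stride_bytes is the
 * length of one framebuffer line in bytes, which may include padding.
 * Hypothetical helper: it models the CPU work and does not touch /dev/fb0. */
void copy_area_to_fb(uint8_t *fb, size_t fb_stride_bytes,
                     const uint32_t *src, int x, int y, int w, int h)
{
    assert(fb != NULL && src != NULL && x >= 0 && y >= 0 && w >= 0 && h >= 0);
    const size_t row_bytes = (size_t)w * sizeof(uint32_t);
    for (int row = 0; row < h; row++) {
        uint8_t *dst = fb + (size_t)(y + row) * fb_stride_bytes
                          + (size_t)x * sizeof(uint32_t);
        memcpy(dst, src + (size_t)row * (size_t)w, row_bytes);
    }
}
```

For a full-screen 1920x1080 update at 32 bpp, that is 1920 × 1080 × 4 = 8,294,400 bytes (about 8.3 MB) per frame, or roughly 500 MB/s at 60 fps, all done by the CPU.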

If you don’t want to write code for a new renderer, I recommend using DRM directly or something with a display server.

If I’m not mistaken, Weston uses libdrm directly; you just need to select the DRM backend in weston.ini. Weston is lightweight.

You can use lv_port_linux to switch backends easily: just modify lv_conf.h and recompile.
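For example, moving lv_port_linux from fbdev to DRM might look like the lv_conf.h excerpt below. The option names are taken from the LVGL v9 configuration template as far as I know, but check them against the lv_conf.h that ships with your version; the DRM driver also needs libdrm available at build time.

```c
/* lv_conf.h (excerpt): enable exactly one display backend */
#define LV_USE_LINUX_FBDEV  0   /* legacy /dev/fb* driver */
#define LV_USE_LINUX_DRM    1   /* /dev/dri/card* through libdrm */
#define LV_USE_SDL          0   /* SDL window, handy when developing on a PC */

/* Read touch/keyboard input from /dev/input/event* without a display server */
#define LV_USE_EVDEV        1
```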