It does make a difference, but in a roundabout way. The DMA buffer is only so large, and it is not large enough to fit all of the data being sent into a single “transaction”. So while the transfer itself is non-blocking, loading the data into the next transaction is not. The CPU has to do that, and that code runs in an ISR context. When you are sending data to multiple displays like you want to do, you end up with a heck of a lot of ISRs firing. ISRs are actually quite expensive: the CPU has to save its current state, load the code that runs in the ISR context, run it, restore the saved state and then pick up where it left off. Because the ESP32-S3 you are using has two cores, you can move the ISRs to the other core so LVGL does not get interrupted while it is running. To do this, the display drivers need to be started on the other core, and the buffer data needs to be passed to those drivers on that core.

You can still push ESP-NOW over to that core as well, along with a bunch of other things. You will need to get your task management right, and the passing of buffers between the running tasks right. You cannot let a task simply sit there and spin its wheels; you have to yield properly so that other tasks get a chance to run. The trick is to keep the loops from taking a long time to run, so if there is a larger amount of work to do, breaking it into chunks is always best.
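Here is a rough sketch of the "break it into chunks and yield" idea in plain C so it can be tried on a PC. The names `process_in_chunks`, `yield_fn` and `count_yield` are made up for this example. On the ESP32 the yield callback would be whatever your task uses to give up the CPU, for example a FreeRTOS call such as `vTaskDelay(1)`; here it only counts how often it was called.

```c
#include <stddef.h>
#include <stdint.h>

/* Callback invoked between chunks so other tasks get a chance to run. */
typedef void (*yield_fn)(void *ctx);

/* Host stand-in for a real yield: just counts how many times we yielded. */
void count_yield(void *ctx)
{
    (*(int *)ctx)++;
}

/*
 * Do a larger job (here: summing a buffer) in pieces of at most `chunk`
 * bytes, yielding after every piece except the last one.
 */
uint32_t process_in_chunks(const uint8_t *data, size_t len, size_t chunk,
                           yield_fn yield, void *ctx)
{
    uint32_t sum = 0;
    size_t done = 0;

    if (chunk == 0) {
        chunk = len;              /* avoid looping forever on a zero chunk */
    }

    while (done < len) {
        size_t remaining = len - done;
        size_t n = remaining < chunk ? remaining : chunk;

        for (size_t i = 0; i < n; i++) {
            sum += data[done + i];
        }
        done += n;

        if (done < len && yield != NULL) {
            yield(ctx);           /* let other tasks run before continuing */
        }
    }
    return sum;
}
```

With a 10 byte buffer and a chunk size of 4 the work is done as 4 + 4 + 2 bytes, with a yield after the first and second piece.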

Stop using the Arduino SDK. It doesn’t use a complete version of the ESP-IDF, and it has a very large amount of wrapper code that makes code execution slower. My suggestion is to use the build system that is built into the ESP-IDF. It is not that hard to use, but it does take a while to get a handle on it. Having someone explain what to do makes it really easy. The documentation is not the clearest and could be better, which is why it helps if someone is able to lend a hand.

LovyanGFX is not a driver. It is a rendering framework, and it is written in a manner that allows it to render. So when you are writing a bitmap to what you believe is the display, you actually are not. The bitmap, which is actually LVGL frame buffer data, gets copied to another buffer, and that is the buffer that gets used to send the data to the display. That copying doesn’t need to happen and it slows things down big time.

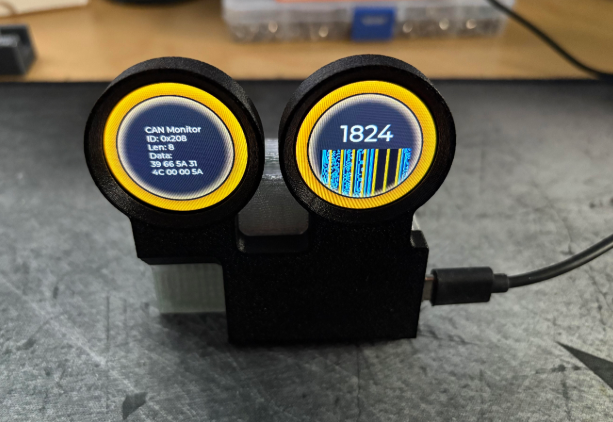

You have the ability to create multiple displays in LVGL, or you can do some kung fu in your flush function to handle writing the data to both displays using a single “display” in LVGL. If you want to go the route of using a single display, you would set the resolution of the LVGL display to a width of 480. The data that is written to any x position below 240 would go to one display, and the data that is written at 240 and above would go to the second display.
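To give an idea of what that kung fu looks like, here is a small sketch in plain C of the splitting step only. `rect_t`, `split_area` and `PANEL_WIDTH` are names I made up for the example; they are not LVGL types. It assumes two 240 wide panels side by side and inclusive coordinates, which is how LVGL describes the area it hands to a flush function.

```c
#include <stdbool.h>
#include <stdint.h>

#define PANEL_WIDTH 240  /* width of each physical panel */

/* Inclusive rectangle, similar in spirit to an LVGL flush area. */
typedef struct {
    int32_t x1, y1, x2, y2;
} rect_t;

/*
 * Split an area of the 480 wide virtual display into the part that lands
 * on the left panel (x < 240) and the part that lands on the right panel
 * (x >= 240). The right part is shifted into that panel's own coordinates.
 * has_left / has_right report whether a panel receives anything at all.
 */
void split_area(const rect_t *area, rect_t *left, bool *has_left,
                rect_t *right, bool *has_right)
{
    *has_left = area->x1 < PANEL_WIDTH;
    *has_right = area->x2 >= PANEL_WIDTH;

    if (*has_left) {
        *left = *area;
        if (left->x2 >= PANEL_WIDTH) {
            left->x2 = PANEL_WIDTH - 1;   /* clip at the seam */
        }
    }
    if (*has_right) {
        *right = *area;
        if (right->x1 < PANEL_WIDTH) {
            right->x1 = PANEL_WIDTH;      /* clip at the seam */
        }
        right->x1 -= PANEL_WIDTH;         /* panel-local x coordinates */
        right->x2 -= PANEL_WIDTH;
    }
}
```

Keep in mind that when an area straddles the seam, every row of pixel data in the buffer contains pixels for both panels, so each row has to be sent as two pieces or copied out first. That is the part that makes the single display route more work than it first looks.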

There are some tricks you can use if you want to utilize 2 LVGL displays. Setting the user data for the display is an important one. What you pass as the user data is the reference to the display driver, so when you need to pass along the buffer that has been rendered, you are automatically passing it to the correct display driver, because the handle is attached to that specific LVGL display. You will only need a single flush function to handle it. You also only need a single task, running on the second core, to handle both display drivers. There is no need to have 2 tasks to do that work.
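The user data trick looks roughly like this. To keep the sketch runnable on a PC, `display_t` and `panel_t` are hypothetical stand-ins and not the real LVGL or driver types. In LVGL 9 the real calls would be `lv_display_set_user_data` and `lv_display_get_user_data` (in LVGL 8 it is the `user_data` field of the display driver struct), and the flush function would queue the buffer for DMA instead of counting bytes.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-in for a display driver handle. */
typedef struct {
    const char *name;
    size_t bytes_sent;
} panel_t;

/* Hypothetical stand-in for an LVGL display: only the user data slot. */
typedef struct {
    void *user_data;
} display_t;

/*
 * One flush function shared by every display. The driver handle is pulled
 * out of the display's user data, so the buffer always goes to the panel
 * that belongs to the display being flushed.
 */
panel_t *flush(display_t *disp, const uint8_t *px, size_t len)
{
    panel_t *panel = (panel_t *)disp->user_data;

    (void)px;                  /* a real driver would hand px to DMA here */
    panel->bytes_sent += len;  /* stand-in for the actual transfer */
    return panel;
}
```

Two displays each get their own `panel_t` as user data and both use the same `flush`, so there is no `if (display == first)` style branching anywhere.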

This can get quite technical so I will do my best to explain it.

The SPIRAM and flash use the same SPI bus, so that’s #1. You are only going to be able to run things as fast as the slowest item attached to that bus.

With the 2MB SPIRAM there are only 4 lanes connected to it. Same goes for the flash. So let’s look at some theoretical numbers… 4 lanes running at 80 MHz means you are able to transfer 320,000,000 bits per second. Now this might seem like it is fast, but watch this. First let’s turn it into bytes, so divide that by 8 and you get 40,000,000. Now remember you have reads and writes occurring at the same time, so divide that in half. Now you are at 20,000,000. Uh oh, there are 2 devices connected to the bus, the flash AND the RAM, so divide that in half once again… Now you are down to 10,000,000 bytes per second. See how fast that number disappeared?
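If you want to play with the numbers yourself, the arithmetic above fits in a couple of lines of C. The function names are made up for this example, and the two halvings are the same rough rule of thumb used above (reads and writes overlapping, then flash and RAM sharing the bus), not something measured on hardware.

```c
#include <stdint.h>

/* Raw bytes per second: one bit per lane per effective clock cycle. */
uint64_t raw_bytes_per_sec(uint32_t lanes, uint64_t effective_hz)
{
    return (uint64_t)lanes * effective_hz / 8u;
}

/*
 * Rough usable SPIRAM rate after the two halvings described above:
 * once for reads and writes happening at the same time, and once for
 * the flash and the RAM sharing the same bus.
 */
uint64_t shared_bytes_per_sec(uint32_t lanes, uint64_t effective_hz)
{
    uint64_t raw = raw_bytes_per_sec(lanes, effective_hz);

    return raw / 2u / 2u;
}
```

Calling `shared_bytes_per_sec(4, 80000000)` gives the 10,000,000 from above, and `raw_bytes_per_sec(4, 80000000)` gives the 40,000,000 you start with.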

So most of the time, reads and writes to the SPIRAM are going to happen at this speed, especially when using DMA transfers. This happens because the call to send the buffer data is non-blocking, so the flash is going to be accessed to load the code that needs to run while the transfer is in progress. With a blocking call you would get better transfer speed, but you lose the ability to render while the data is being transferred.

If you bump up to the version that has octal SPIRAM but the flash is still using a quad SPI connection, you are only going to be able to tweak the SPI to what the slowest device is able to handle. You will have the ability to overclock to 120 MHz, but you will not have the ability to use DTR mode, so the best you will get for speed is 120 MHz. Remember the bus sharing between the SPIRAM and the flash? Well, that quad flash is going to take a while to load code, and that ends up becoming the bottleneck. Only one device is able to access the bus at a given time, so while code is being read from flash, access to the SPIRAM stops. There are several ways to handle this, and one of them is to load all of the code in flash into SPIRAM when the ESP32-S3 boots up. This is going to eat up quite a bit of SPIRAM, so if you have a lot of code that gets compiled, you are going to see your available SPIRAM drop by roughly the size of the compiled binary.

If you get the S3 that has octal SPIRAM and also has octal flash, you remove that bottleneck, and you also get to double the effective SPI bus clock speed by turning on DTR. So instead of running at 120 MHz, the bus has an effective clock rate of 240 MHz. Let’s see what those numbers look like.

8 lanes * 240,000,000 = 1,920,000,000 bits / 8 = 240,000,000 bytes * 0.5 * 0.5 = 60,000,000 bytes per second

That is 6 times faster than what you get from the quad SPI. Here is how that compares to what a display is able to take.

Single display on a quad SPI bus running at 80 MHz

4 lanes * 80,000,000 / 8 = 40,000,000 bytes per second

With octal SPIRAM and octal flash, that 60,000,000 is above what the display is able to handle for a transmission speed, instead of being below it. Being below is not good, because you can only feed data to the display as fast as you can read it from memory, so your transfer speed ends up lower because of the bottleneck at the SPIRAM.

Having both displays attached to the same SPI bus is going to slow things down a lot: the maximum transfer speed to each display gets cut in half. My suggestion is to have a separate bus for each display. It is going to put you upside down again, because the 60,000,000 from memory gets split between two buses, but a max transfer of 30,000,000 to each display is faster than a max of 20,000,000 to each display. That max is also going to be affected by whether or not LVGL is rendering to a buffer and whether code is being loaded from flash. With the 2 displays attached to the same bus you are never going to do better than 20,000,000 per display, no matter what is going on with the ESP32-S3. With separate buses, if there is no access taking place to the flash and LVGL happens to not be rendering to a buffer, your SPIRAM access speed when transferring just jumped up to 120,000,000, which means you can max out the transfer speed of 40,000,000 on each bus and still have some room left over for accessing the SPIRAM.
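The shared bus versus separate bus comparison boils down to "whichever is smaller, your share of the memory rate or your share of the display bus rate". Here is a small sketch of that in C. `per_display_rate` and its parameters are names I made up, and it assumes the memory rate and the bus rate are split evenly between the displays, which is a simplification.

```c
#include <stdint.h>

static uint64_t min_u64(uint64_t a, uint64_t b)
{
    return a < b ? a : b;
}

/*
 * Theoretical per-display ceiling in bytes per second.
 *   mem_rate: what the SPIRAM can supply in total
 *   bus_rate: what one display SPI bus can carry
 *   displays: number of displays being fed
 *   buses:    number of display buses (1 = shared, displays = one each)
 */
uint64_t per_display_rate(uint64_t mem_rate, uint64_t bus_rate,
                          uint32_t displays, uint32_t buses)
{
    uint64_t mem_share = mem_rate / displays;          /* memory side limit */
    uint64_t bus_share = bus_rate * buses / displays;  /* display side limit */

    return min_u64(mem_share, bus_share);
}
```

With 60,000,000 from memory and 40,000,000 per display bus, two displays on one bus come out at 20,000,000 each and two displays on two buses come out at 30,000,000 each. Bump the memory rate to 120,000,000 and the two bus setup reaches the full 40,000,000 per display, while the shared bus stays stuck at 20,000,000.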

These are theoretical numbers which you won’t actually see. They are only intended to give an understanding of the mechanics taking place at the hardware level.