So, what I’ve done so far is:

Upgraded to 7.10.0.

Made no difference.

Increased the buffer size after a tip from embeddedt.

Made HUGE difference! Even without DMA.

Enabled DMA to transfer data in flush_cb.

Makes a noticeable difference but corrupts the data. I also get a DMA transfer error.

Enabled lv_gpu_stm32_dma2d_init.

Also makes a noticeable difference, but the background color isn’t drawn and hidden objects only get erased where there are other objects, so popups etc. leave “residue” on the screen.

The relevant parts of the code now look like this:

    #define DISP_BUF_SIZE (LV_HOR_RES_MAX * LV_VER_RES_MAX)
    #define USE_DMA (1)

    #if USE_DMA
    static DMA_HandleTypeDef pixel_dma;
    #endif

    static void system_display_lv_flush(lv_disp_drv_t *disp, const lv_area_t *area,
                                        lv_color_t *color_p) {
    #if USE_DMA
        int width = 1 + area->x2 - area->x1;
        for (uint16_t y = area->y1; y <= area->y2; y++) {
            uint32_t FBIndex = (uint32_t)(drv_framebuffer_get_ptr() + y * LV_HOR_RES_MAX + area->x1);
            // Start the DMA transfer using polling mode
            HAL_StatusTypeDef hal_status =
                HAL_DMA_Start(&pixel_dma, (uint32_t)color_p, FBIndex, width);
            if (hal_status != HAL_OK) {
                system_fault_panic("HAL_DMA_Start failed",
                                   (hal_status << 16) | pixel_dma.ErrorCode);
            }
            hal_status = HAL_DMA_PollForTransfer(&pixel_dma, HAL_DMA_FULL_TRANSFER, 1000);
    #if 0
            // This keeps panicking so we definitely have problems with DMA.
            if (hal_status != HAL_OK) {
                system_fault_panic(
                    "HAL_DMA_PollForTransfer failed",
                    (hal_status << 16) | pixel_dma.ErrorCode);
            }
    #endif
            color_p += width;
    #else
        for (uint16_t y = area->y1; y <= area->y2; y++) {
            for (uint16_t x = area->x1; x <= area->x2; x++) {
                drv_framebuffer_set_pixel(x, y, color_p->full);
                color_p++;
            }
    #endif
        }
        lv_disp_flush_ready(disp);
    }

    void system_display_create() {
        lv_init();

    #if LV_USE_GPU_STM32_DMA2D
        lv_gpu_stm32_dma2d_init();
    #endif

    #if USE_DMA
        __HAL_RCC_DMA2_CLK_ENABLE();
        NVIC_ClearPendingIRQ(DMA2_Stream0_IRQn);
        HAL_NVIC_DisableIRQ(DMA2_Stream0_IRQn); // DMA IRQ Disable

        // Configure DMA request pixel_dma on DMA2_Stream0
        pixel_dma.Instance = DMA2_Stream0;
        pixel_dma.Init.Channel = DMA_CHANNEL_0;
        pixel_dma.Init.Direction = DMA_MEMORY_TO_MEMORY;
        pixel_dma.Init.PeriphInc = DMA_PINC_ENABLE;
        pixel_dma.Init.MemInc = DMA_MINC_ENABLE;
        pixel_dma.Init.PeriphDataAlignment = DMA_PDATAALIGN_WORD;
        pixel_dma.Init.MemDataAlignment = DMA_MDATAALIGN_WORD;
        pixel_dma.Init.Mode = DMA_NORMAL;
        pixel_dma.Init.Priority = DMA_PRIORITY_LOW;
        pixel_dma.Init.FIFOMode = DMA_FIFOMODE_ENABLE;
        pixel_dma.Init.FIFOThreshold = DMA_FIFO_THRESHOLD_FULL;
        pixel_dma.Init.MemBurst = DMA_MBURST_SINGLE;
        pixel_dma.Init.PeriphBurst = DMA_PBURST_SINGLE;

        HAL_StatusTypeDef hal_status = HAL_DMA_Init(&pixel_dma);
        if (hal_status != HAL_OK) {
            system_fault_panic("HAL_DMA_Init failed",
                               (hal_status << 16) | pixel_dma.ErrorCode);
        }
    #endif

        static lv_disp_buf_t disp_buf;
        lv_color_t *buf = system_sdram_alloc_top(DISP_BUF_SIZE * sizeof(lv_color_t));
        memset(buf, 0, DISP_BUF_SIZE * sizeof(lv_color_t));
        lv_disp_buf_init(&disp_buf, buf, NULL, DISP_BUF_SIZE);

        static lv_disp_drv_t disp_drv;
        lv_disp_drv_init(&disp_drv);
        disp_drv.hor_res = LV_HOR_RES_MAX;
        disp_drv.ver_res = LV_VER_RES_MAX;
        disp_drv.flush_cb = system_display_lv_flush;
        disp_drv.buffer = &disp_buf;
        lv_disp_drv_register(&disp_drv);
    }

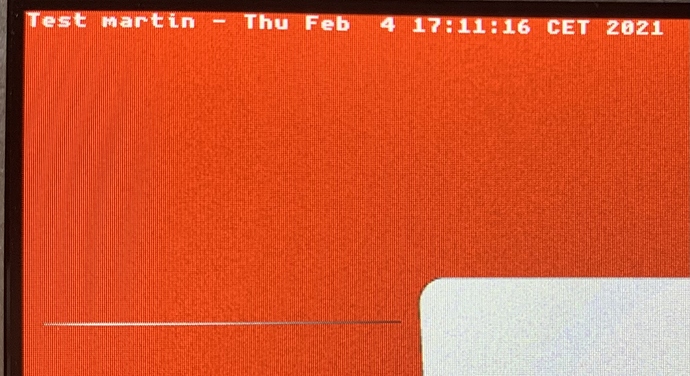

This is how it looks with both DMAs turned off:

The graphics are a bit slow but everything is drawn correctly.

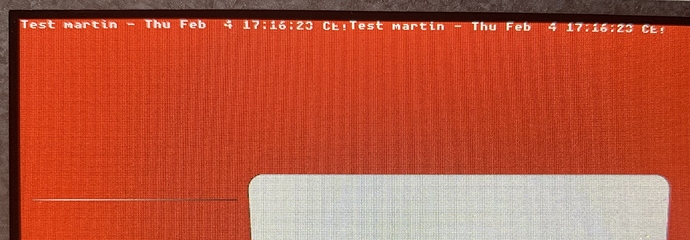

This is with only USE_DMA enabled:

Some pixels in the text are missing and there are other weird artefacts, such as parts of the graphics/text being drawn as an extra copy in the wrong place (like the text here).
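
One thing I have not ruled out yet is a unit mismatch in the DMA length. As far as I understand the STM32 stream DMA, the length passed to HAL_DMA_Start is counted in source data items (words here, because of DMA_PDATAALIGN_WORD), not in pixels. The sketch below only illustrates that arithmetic and is not a confirmed fix: `dma_items_for_row` and `fb_row_offset_bytes` are hypothetical helpers that are not part of LVGL or the HAL, and the 2-byte pixel size used in the note after it assumes a 16-bit lv_color_t.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: number of DMA data items needed to copy one row.
 * Returns 0 when the row length in bytes is not a whole multiple of the
 * DMA transfer unit, meaning a narrower transfer unit would be needed. */
uint32_t dma_items_for_row(uint32_t width_px, uint32_t bytes_per_px,
                           uint32_t dma_unit_bytes) {
    assert(bytes_per_px > 0 && dma_unit_bytes > 0);
    uint32_t row_bytes = width_px * bytes_per_px;
    if (row_bytes % dma_unit_bytes != 0) {
        return 0;
    }
    return row_bytes / dma_unit_bytes;
}

/* Hypothetical helper: byte offset of pixel (x, y) in a linear framebuffer
 * that is stride_px pixels wide. */
size_t fb_row_offset_bytes(uint32_t x, uint32_t y, uint32_t stride_px,
                           uint32_t bytes_per_px) {
    return ((size_t)y * stride_px + x) * bytes_per_px;
}
```

Under those assumptions, a 100-pixel row of 16-bit pixels is 50 words, so passing `width` (100) with word alignment would copy twice the row. A 101-pixel row cannot be expressed in whole words at all, which would call for DMA_PDATAALIGN_HALFWORD and DMA_MDATAALIGN_HALFWORD instead.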

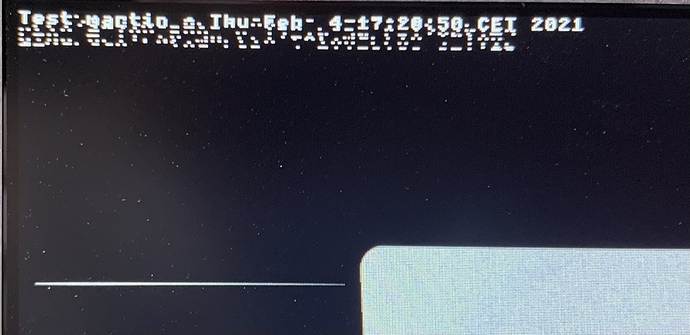

This is with only LV_USE_GPU_STM32_DMA2D enabled:

The background is never drawn (probably why popups are leaving residue) and there’s weird digital noise in the upper left corner of the screen. That noise is only visible when LV_USE_GPU_STM32_DMA2D is enabled; it’s completely gone when I disable it.

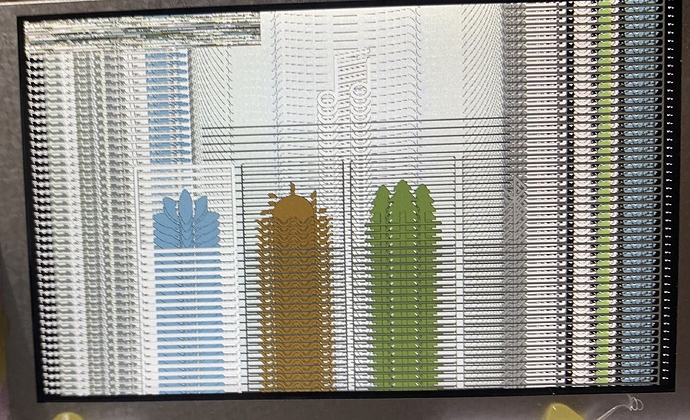

With both USE_DMA and LV_USE_GPU_STM32_DMA2D active the graphics are IMPRESSIVELY fast, so I’d really like to get this working.

Any tips or suggestions are welcome.

and the background color is still black instead of red, but the repetition on the buffer is less (so to speak). Instead of the multitude of repetitions as in the picture above I now have 3 repetitions. The noise in the upper left corner is, however, still there.
