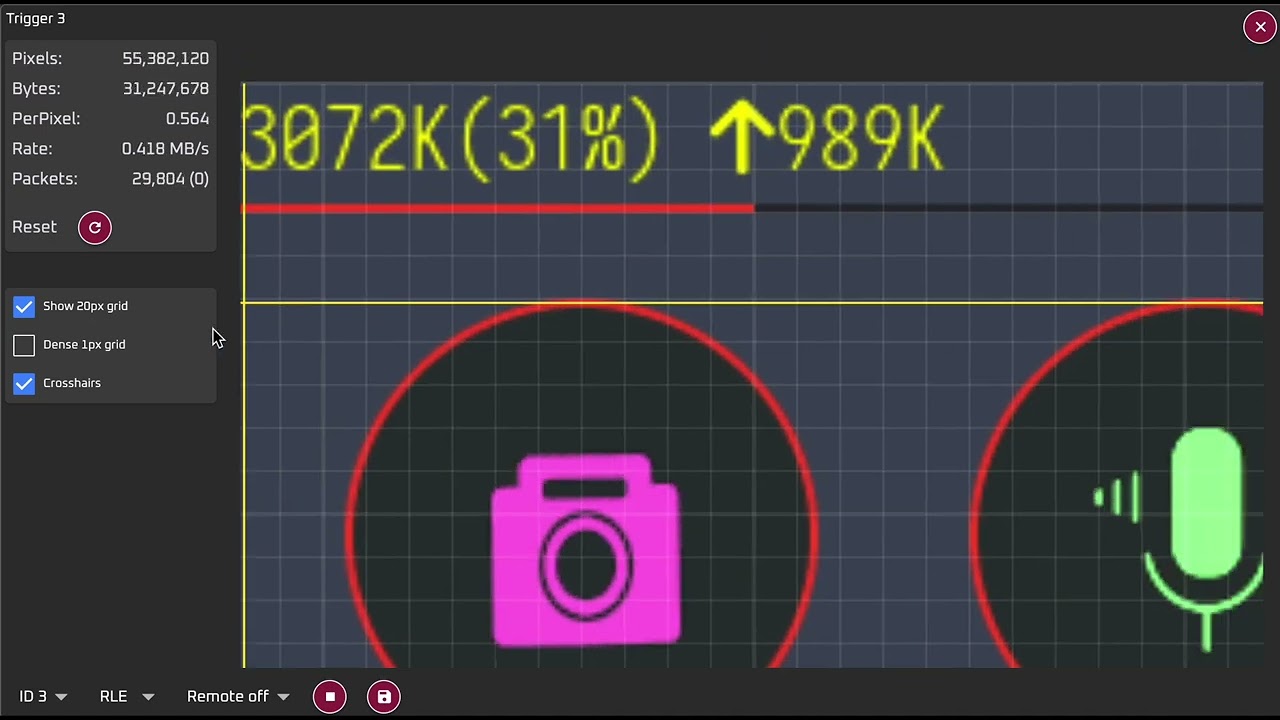

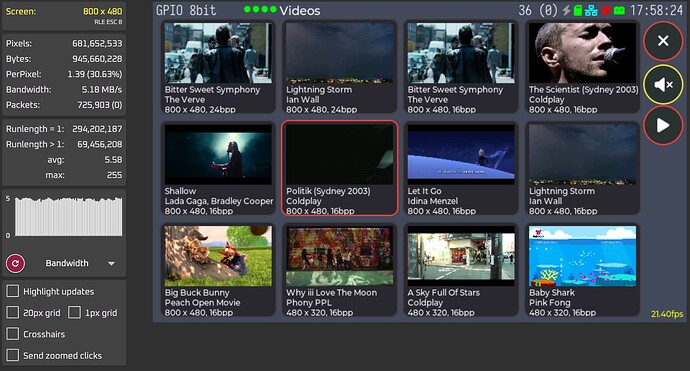

Yes, we know that if RLE comes out at more than 2 bytes per pixel there is no benefit, and in fact some harm, since the extra processing also makes it less efficient. You are stating the obvious, and no one has claimed otherwise. However, I specifically said it’s always under 2 in my use cases, even for images and video, and for UI-type screens it’s usually around 0.4 bytes per pixel, a significant saving. My software client can choose RLE or just send 'em as you brung 'em, and I time the transfers and data rates, so I can see which is advantageous. Generally, I have found that with a UI-type screen the RLE encoding is more efficient on time despite the extra processing: the 50-75% reduction in bytes that need to be sent makes up for it.
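To make the break-even point concrete, here is a rough sketch of the size side of that comparison. It is not the encoder discussed in this thread: it assumes 16-bit pixels (2 bytes raw) and a made-up run format of one count byte followed by the 2-byte pixel value, and all three function names are hypothetical. It also only compares byte counts, whereas what I actually do is time the transfers.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Assumed (hypothetical) run format: [count: 1 byte][pixel: 2 bytes].
 * Each run costs 3 bytes and covers 1..255 identical pixels. */
#define RLE_BYTES_PER_RUN 3u
#define RLE_MAX_RUN       255u

/* Number of bytes the buffer would occupy after RLE encoding. */
size_t rle_encoded_size(const uint16_t *px, size_t n)
{
    assert(px != NULL || n == 0);

    size_t bytes = 0;
    size_t i = 0;
    while (i < n) {
        size_t run = 1;
        /* Extend the run while the next pixel matches and the count fits. */
        while (i + run < n && run < RLE_MAX_RUN && px[i + run] == px[i]) {
            run++;
        }
        bytes += RLE_BYTES_PER_RUN;
        i += run;
    }
    return bytes;
}

/* Average encoded bytes per pixel; raw is 2.0 for 16-bit pixels. */
double rle_bytes_per_pixel(const uint16_t *px, size_t n)
{
    if (n == 0) {
        return 0.0;
    }
    return (double)rle_encoded_size(px, n) / (double)n;
}

/* 1 if the RLE form is strictly smaller than sending the pixels raw. */
int rle_is_smaller(const uint16_t *px, size_t n)
{
    return rle_encoded_size(px, n) < n * sizeof(uint16_t);
}
```

With this particular format, a buffer where every pixel differs from its neighbour costs 3 bytes per pixel, which is worse than raw, while long flat areas like you get on a UI screen drop well below 1. A sender could call something like `rle_is_smaller()` per buffer and fall back to raw when it returns 0, though on a real link the encode time would have to be weighed in as well.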

I’m not sure why you keep beating this double-buffer/DMA point in this context - almost everyone I know using LVGL, and a significant number of users on the forum, barely have enough memory on their MCUs for a partial frame buffer, let alone two full-screen buffers with DMA transfer. The author never wrote this for that scenario, nor made that claim, and if you’ve got enough RAM for two full-screen buffers and have DMA transfer, then you probably don’t need this kind of solution. A Formula One driver doesn’t go to his local car parts dealer to get go-faster stripes…